Regularizers

Regularization in PyKEEN.

Functions

- Get the regularizer class.
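Looking up a regularizer class by name pairs naturally with `get_normalized_name` on the base class documented below. The following is a minimal, torch-free sketch of such a lookup. The normalization rule (lowercase, drop `_` and `-`, strip a trailing `regularizer`), the function `lookup_regularizer_cls`, and the classes `L2Regularizer` and `ZeroRegularizer` are hypothetical illustrations, not PyKEEN's actual API.

```python
from typing import Dict, Type

# Assumed suffix that is stripped during normalization (hypothetical rule).
_SUFFIX = "regularizer"


def normalize(name: str) -> str:
    """Lowercase, drop '_' and '-', then strip a trailing 'regularizer'."""
    key = name.lower().replace("_", "").replace("-", "")
    if key.endswith(_SUFFIX) and key != _SUFFIX:
        key = key[: -len(_SUFFIX)]
    return key


class Regularizer:
    """Hypothetical stand-in for the documented base class."""

    @classmethod
    def get_normalized_name(cls) -> str:
        return normalize(cls.__name__)


class L2Regularizer(Regularizer):  # hypothetical subclass
    pass


class ZeroRegularizer(Regularizer):  # hypothetical subclass
    pass


# Registry keyed by each class's normalized name.
REGISTRY: Dict[str, Type[Regularizer]] = {
    cls.get_normalized_name(): cls for cls in (L2Regularizer, ZeroRegularizer)
}


def lookup_regularizer_cls(query: str) -> Type[Regularizer]:
    """Hypothetical lookup: normalize the query, then consult the registry."""
    key = normalize(query)
    if key not in REGISTRY:
        raise ValueError(f"unknown regularizer: {query!r}")
    return REGISTRY[key]
```

Normalizing both the registered names and the query lets users write `"l2"`, `"L2Regularizer"`, or `"l2_regularizer"` interchangeably.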

Classes

- A simple L_p-norm-based regularizer.
- A regularizer that does not perform any regularization.
- A convex combination of regularizers.
- A simple x^p-based regularizer.
- A regularizer for the soft constraints in TransH.
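The regularization terms named above can be sketched in plain Python on lists of floats, which keeps the arithmetic visible. This is an illustration under stated assumptions, not PyKEEN's implementation: all function names are hypothetical, each row stands in for one embedding vector, the reduction over rows (a mean) is an assumption, and the TransH penalties follow the soft constraints described in the TransH paper (entity norms at most 1, translation vectors nearly orthogonal to the hyperplane normals).

```python
from typing import Callable, List, Sequence

Vector = Sequence[float]
Batch = Sequence[Vector]


def lp_term(x: Batch, p: float = 2.0) -> float:
    """Mean over rows of the L_p norm ||x_i||_p (assumed reduction)."""
    norms = [sum(abs(v) ** p for v in row) ** (1.0 / p) for row in x]
    return sum(norms) / len(norms)


def power_sum_term(x: Batch, p: float = 2.0) -> float:
    """Mean over rows of sum_j |x_ij|^p, i.e. no p-th root is taken."""
    sums = [sum(abs(v) ** p for v in row) for row in x]
    return sum(sums) / len(sums)


def convex_combination(
    terms: List[Callable[[Batch], float]], weights: List[float]
) -> Callable[[Batch], float]:
    """Combine terms with non-negative weights rescaled to sum to 1."""
    if any(w < 0 for w in weights) or sum(weights) == 0:
        raise ValueError("weights must be non-negative and not all zero")
    total = sum(weights)
    alphas = [w / total for w in weights]
    return lambda x: sum(a * f(x) for a, f in zip(alphas, terms))


def transh_soft_constraints(
    entities: Batch, normals: Batch, translations: Batch, epsilon: float = 1e-3
) -> float:
    """Hinge penalties for the TransH soft constraints.

    - sum_e max(0, ||e||_2^2 - 1): keeps entity embeddings in the unit ball
    - sum_r max(0, (w_r . d_r)^2 / ||d_r||_2^2 - epsilon^2): keeps each
      translation d_r nearly orthogonal to its hyperplane normal w_r
    """
    entity_term = sum(max(0.0, sum(v * v for v in e) - 1.0) for e in entities)
    orthogonal_term = 0.0
    for w, d in zip(normals, translations):
        dot = sum(a * b for a, b in zip(w, d))
        orthogonal_term += max(0.0, dot * dot / sum(b * b for b in d) - epsilon**2)
    return entity_term + orthogonal_term
```

The L_p and x^p terms differ only by the p-th root: for x = [[3.0, 4.0], [0.0, 0.0]] and p = 2, the L_p term is (5 + 0) / 2 = 2.5, while the power-sum term is (25 + 0) / 2 = 12.5.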

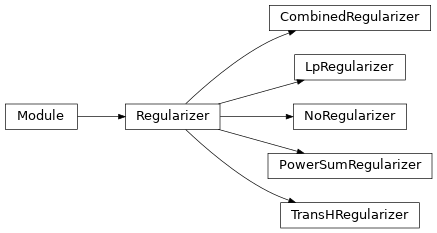

Class Inheritance Diagram¶

Base Classes

- class Regularizer(device, weight=1.0, apply_only_once=False)

A base class for all regularizers.

Initializes the regularizer on the given device, with an overall regularization weight and a flag controlling whether the regularization is applied only once.

- apply_only_once: bool

Whether the regularization should be applied only once. This was used for ConvKB; defaults to False.

- abstract forward(x)

Compute the regularization term for one tensor.

- Return type

FloatTensor

- classmethod get_normalized_name()

Get the normalized name of the regularizer class.

- Return type

- hpo_default: ClassVar[Mapping[str, Any]]

The default strategy for optimizing the regularizer’s hyperparameters.

- regularization_term: torch.FloatTensor

The current regularization term (a scalar).

- property term: torch.FloatTensor

Return the weighted regularization term.

- Return type

FloatTensor

- weight: torch.FloatTensor

The overall regularization weight.
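The attributes above (`weight`, `regularization_term`, `apply_only_once`, and the `term` property) fit together roughly as follows. This is a minimal sketch that replaces tensors with floats and omits the `device` argument; the class names and the `update`/`reset` helpers are hypothetical and only illustrate how a weighted, optionally apply-once term could be accumulated.

```python
from abc import ABC, abstractmethod
from typing import Sequence


class SketchRegularizer(ABC):
    """Hypothetical, torch-free stand-in for the documented Regularizer."""

    def __init__(self, weight: float = 1.0, apply_only_once: bool = False) -> None:
        self.weight = weight  # overall regularization weight
        self.apply_only_once = apply_only_once
        self.regularization_term = 0.0  # current (unweighted) scalar term
        self._updated = False  # hypothetical bookkeeping flag

    @abstractmethod
    def forward(self, x: Sequence[float]) -> float:
        """Compute the regularization term for one tensor (here: one vector)."""

    def update(self, *tensors: Sequence[float]) -> None:
        """Hypothetical helper: add forward(x) for each given tensor."""
        if self.apply_only_once and self._updated:
            return  # already applied once; ignore further updates
        for x in tensors:
            self.regularization_term += self.forward(x)
        self._updated = True

    def reset(self) -> None:
        """Hypothetical helper: clear the accumulated term (e.g. per batch)."""
        self.regularization_term = 0.0
        self._updated = False

    @property
    def term(self) -> float:
        """Return the weighted regularization term."""
        return self.weight * self.regularization_term


class SquaredSumRegularizer(SketchRegularizer):
    """Hypothetical subclass: sum of squared entries."""

    def forward(self, x: Sequence[float]) -> float:
        return sum(v * v for v in x)
```

With `apply_only_once=True`, a second `update` call is ignored until `reset` is called, so `term` stays at `weight * regularization_term` from the first call.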