Loss Functions

Loss functions integrated in PyKEEN.

Rather than re-using the built-in loss functions in PyTorch, we have elected to re-implement some of the code from torch.nn.modules.loss in order to encode the three different kinds of loss functions accepted by PyKEEN in a class hierarchy. This allows PyKEEN to handle different kinds of loss functions more dynamically and to share code between them. Further, it gives potential users more insight into the loss functions.

Throughout the following explanations of pointwise loss functions, pairwise loss functions, and setwise loss functions, we will assume the set of entities \(\mathcal{E}\), set of relations \(\mathcal{R}\), set of possible triples \(\mathcal{T} = \mathcal{E} \times \mathcal{R} \times \mathcal{E}\), set of possible subsets of possible triples \(2^{\mathcal{T}}\) (i.e., the power set of \(\mathcal{T}\)), set of positive triples \(\mathcal{K}\), set of negative triples \(\mathcal{\bar{K}}\), scoring function (e.g., TransE) \(f: \mathcal{T} \rightarrow \mathbb{R}\) and labeling function \(l:\mathcal{T} \rightarrow \{0,1\}\) where a value of 1 denotes the triple is positive (i.e., \((h,r,t) \in \mathcal{K}\)) and a value of 0 denotes the triple is negative (i.e., \((h,r,t) \notin \mathcal{K}\)).

Note

In most realistic use cases of knowledge graph embedding models, you will have observed a subset of positive triples \(\mathcal{T_{obs}} \subset \mathcal{K}\) and no observations over negative triples. Depending on the training assumption (sLCWA or LCWA), this will mean negative triples are generated in a variety of patterns.

Note

Following the open world assumption (OWA), triples \(\mathcal{\bar{K}}\) are better named “not positive” rather than negative. This is most relevant for pointwise loss functions. For pairwise and setwise loss functions, triples are compared as being more/less positive and the binary classification is not relevant.

Pointwise Loss Functions

A pointwise loss is applied to a single triple. It takes the form of \(L: \mathcal{T} \rightarrow \mathbb{R}\) and computes a real value for the triple given its labeling. Typically, a pointwise loss function takes the form of \(g: \mathbb{R} \times \{0,1\} \rightarrow \mathbb{R}\), applied to the output of the scoring function and the output of the labeling function.

Examples

| Pointwise Loss | Formulation |
|---|---|
| Square Error | \(g(s, l) = \frac{1}{2}(s - l)^2\) |
| Binary Cross Entropy | \(g(s, l) = -(l*\log (\sigma(s))+(1-l)*(\log (1-\sigma(s))))\) |
| Pointwise Hinge | \(g(s, l) = \max(0, \lambda -\hat{l}*s)\) |
| Pointwise Logistic (softplus) | \(g(s, l) = \log(1+\exp(-\hat{l}*s))\) |

For the pointwise logistic and pointwise hinge losses, \(\hat{l}\) is the label \(l\) rescaled from \(\{0,1\}\) to \(\{-1,1\}\). The logistic sigmoid function is defined as \(\sigma(z) = \frac{1}{1 + e^{-z}}\).
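The four formulations in the table can be sketched in plain Python as follows. This is a minimal illustration of the formulas only, not PyKEEN's implementation: the function names, the linear rescaling \(\hat{l} = 2l - 1\), and the default margin \(\lambda = 1\) are assumptions made for the example, and the naive use of `math.exp` is not numerically robust for scores of very large magnitude.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic sigmoid, sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + math.exp(-z))


def rescale_label(label: int) -> int:
    """Map a label from {0, 1} to {-1, 1} (assumed rescaling: 2 * l - 1)."""
    return 2 * label - 1


def square_error(score: float, label: int) -> float:
    """g(s, l) = 1/2 * (s - l)^2"""
    return 0.5 * (score - label) ** 2


def binary_cross_entropy(score: float, label: int) -> float:
    """g(s, l) = -(l * log(sigma(s)) + (1 - l) * log(1 - sigma(s)))"""
    p = sigmoid(score)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


def pointwise_hinge(score: float, label: int, margin: float = 1.0) -> float:
    """g(s, l) = max(0, lambda - l_hat * s); the default margin is an assumption."""
    return max(0.0, margin - rescale_label(label) * score)


def pointwise_logistic(score: float, label: int) -> float:
    """g(s, l) = log(1 + exp(-l_hat * s))"""
    return math.log1p(math.exp(-rescale_label(label) * score))
```

Because \(1 - \sigma(s) = \sigma(-s)\), the binary cross entropy and pointwise logistic formulations above yield the same value for any score and label; they differ only in how the label is encoded.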

Batching

The pointwise loss of a set of triples (i.e., a batch) \(\mathcal{L}_L: 2^{\mathcal{T}} \rightarrow \mathbb{R}\) is defined as the arithmetic mean of the pointwise losses over each triple in the subset \(\mathcal{B} \in 2^{\mathcal{T}}\):

\[\mathcal{L}_L(\mathcal{B}) = \frac{1}{|\mathcal{B}|} \sum_{(h,r,t) \in \mathcal{B}} L(h,r,t)\]
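As a small sketch of this batching rule, the following assumes that each triple in the batch has already been reduced to a (score, label) pair, i.e., the outputs of \(f\) and \(l\). The helper name `batch_pointwise_loss` and the choice of the square error as the example \(g\) are illustrative assumptions, not part of PyKEEN.

```python
from typing import Callable, Sequence, Tuple


def square_error(score: float, label: int) -> float:
    """Example pointwise loss: g(s, l) = 1/2 * (s - l)^2."""
    return 0.5 * (score - label) ** 2


def batch_pointwise_loss(
    batch: Sequence[Tuple[float, int]],
    g: Callable[[float, int], float],
) -> float:
    """Arithmetic mean of a pointwise loss g over (score, label) pairs."""
    if not batch:
        raise ValueError("the batch must contain at least one triple")
    return sum(g(score, label) for score, label in batch) / len(batch)


# Three scored triples: two labeled positive (1), one labeled negative (0).
example_batch = [(1.0, 1), (0.0, 1), (0.5, 0)]
example_loss = batch_pointwise_loss(example_batch, square_error)
```

The individual terms here are 0, 0.5, and 0.125, so the batch loss is their mean, 0.625 / 3.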

Pairwise Loss Functions

A pairwise loss is applied to a pair of triples - a positive and a negative one. It is defined as \(L: \mathcal{K} \times \mathcal{\bar{K}} \rightarrow \mathbb{R}\) and computes a real value for the pair. Typically, a pairwise loss is computed as a function \(g\) of the difference between the scores of the positive and negative triples that takes the form \(g: \mathbb{R} \times \mathbb{R} \rightarrow \mathbb{R}\).

Examples

Typically, \(g\) takes the following form, in which a function \(h: \mathbb{R} \rightarrow \mathbb{R}\) is applied to the difference between the scores of the positive and the negative triple: \(g(f(k), f(\bar{k})) = h(f(k) - f(\bar{k}))\).

In the following examples of pairwise loss functions, the following shorthand is used: \(\Delta := f(k) - f(\bar{k})\). The pairwise logistic loss can be considered a special case of the soft margin ranking loss where \(\lambda = 0\).

| Pairwise Loss | Formulation |
|---|---|
| Pairwise Hinge (margin ranking) | \(h(\Delta) = \max(0, \Delta + \lambda)\) |
| Soft Margin Ranking | \(h(\Delta) = \log(1 + \exp(\Delta + \lambda))\) |
| Pairwise Logistic | \(h(\Delta) = \log(1 + \exp(\Delta))\) |
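The three formulations of \(h\) can be sketched in plain Python as below. The functions take \(\Delta\) as an input exactly as it appears in the table, so the sketch does not decide which ordering of positive and negative scores is rewarded; that depends on the sign convention of \(\Delta\) and on whether the scoring function treats higher or lower values as more plausible. The function names and the default margin \(\lambda = 1\) are assumptions for the example, not PyKEEN's API.

```python
import math


def pairwise_hinge(delta: float, margin: float = 1.0) -> float:
    """h(delta) = max(0, delta + lambda)"""
    return max(0.0, delta + margin)


def soft_margin_ranking(delta: float, margin: float = 1.0) -> float:
    """h(delta) = log(1 + exp(delta + lambda))"""
    return math.log1p(math.exp(delta + margin))


def pairwise_logistic(delta: float) -> float:
    """h(delta) = log(1 + exp(delta))"""
    return math.log1p(math.exp(delta))
```

Setting `margin=0.0` in `soft_margin_ranking` reproduces `pairwise_logistic`, which is the special case described above. The soft margin ranking loss is also a smooth upper bound on the pairwise hinge loss, since \(\log(1 + e^{x}) \geq \max(0, x)\) for every real \(x\).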

Batching

The pairwise loss for a set of pairs of positive/negative triples \(\mathcal{L}_L: 2^{\mathcal{K} \times \mathcal{\bar{K}}} \rightarrow \mathbb{R}\) is defined as the arithmetic mean of the pairwise losses for each pair of positive and negative triples in the subset \(\mathcal{B} \in 2^{\mathcal{K} \times \mathcal{\bar{K}}}\).
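Written out, the mean described in the sentence above reads as follows. This is a direct transcription of that sentence rather than a formula quoted from the source, with \((k, \bar{k})\) denoting one pair of a positive triple \(k \in \mathcal{K}\) and a negative triple \(\bar{k} \in \mathcal{\bar{K}}\):

\[\mathcal{L}_L(\mathcal{B}) = \frac{1}{|\mathcal{B}|} \sum_{(k, \bar{k}) \in \mathcal{B}} L(k, \bar{k})\]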

Setwise Loss Functions

A setwise loss is applied to a set of triples which can be either positive or negative. It is defined as \(L: 2^{\mathcal{T}} \rightarrow \mathbb{R}\). The two setwise loss functions implemented in PyKEEN, pykeen.losses.NSSALoss and pykeen.losses.CrossEntropyLoss, differ widely in their paradigms, but both share the notion that triples are not strictly positive or negative.

Batching

The setwise loss for a set of sets of triples \(\mathcal{L}_L: 2^{2^{\mathcal{T}}} \rightarrow \mathbb{R}\) is defined as the arithmetic mean of the setwise losses for each set of triples \(b\) in the subset \(\mathcal{B} \in 2^{2^{\mathcal{T}}}\).
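The batching rule can be sketched as a mean over sets, where each set is reduced to one loss value first. In the sketch below, every set is represented as a list of (score, target weight) pairs, and a generic softmax cross entropy stands in as the example setwise loss. Both the representation and the function names are assumptions for illustration; this is not the implementation of pykeen.losses.CrossEntropyLoss or pykeen.losses.NSSALoss.

```python
import math
from typing import Callable, Sequence, Tuple

# One set of triples, reduced to (score, target weight) pairs (assumed layout).
ScoredSet = Sequence[Tuple[float, float]]


def softmax_cross_entropy(scored_set: ScoredSet) -> float:
    """Cross entropy between target weights and the softmax over the set's scores."""
    scores = [score for score, _ in scored_set]
    shift = max(scores)  # subtract the maximum for a stable log-sum-exp
    log_z = shift + math.log(sum(math.exp(s - shift) for s in scores))
    return -sum(weight * (score - log_z) for score, weight in scored_set)


def batch_setwise_loss(
    batch: Sequence[ScoredSet],
    loss: Callable[[ScoredSet], float],
) -> float:
    """Arithmetic mean of a setwise loss over every set in the batch."""
    if not batch:
        raise ValueError("the batch must contain at least one set")
    return sum(loss(b) for b in batch) / len(batch)
```

Using real-valued target weights instead of binary labels mirrors the remark that triples in a setwise loss are not strictly positive or negative.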

Functions

|

Check if the model has a margin ranking loss. |

|

Check if the model has a NSSA loss. |

Classes

|

Pointwise loss functions compute an independent loss term for each triple-label pair. |

|

Pairwise loss functions compare the scores of a positive triple and a negative triple. |

|

Setwise loss functions compare the scores of several triples. |

|

A module for the numerically unstable version of explicit Sigmoid + BCE loss. |

|

A module for the binary cross entropy loss. |

|

A module for the cross entropy loss that evaluates the cross entropy after softmax output. |

|

A module for the margin ranking loss. |

|

A module for the mean square error loss. |

|

An implementation of the self-adversarial negative sampling loss function proposed by [sun2019]. |

|

A module for the softplus loss. |

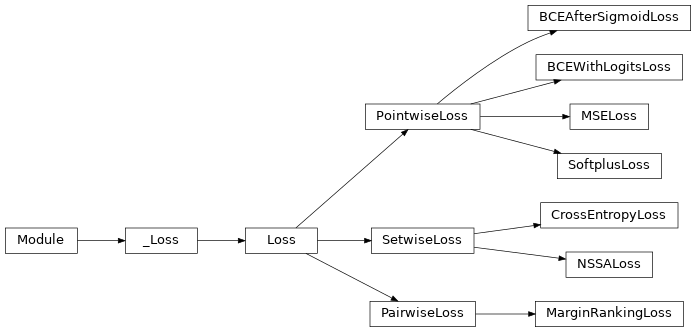

Class Inheritance Diagram