Training

Stochastic Local Closed World Assumption

- class SLCWATrainingLoop(model, triples_factory, optimizer=None, negative_sampler=None, negative_sampler_kwargs=None, automatic_memory_optimization=True)

A training loop that uses the stochastic local closed world assumption training approach.

Initialize the training loop.

- Parameters

model (Model) – The model to train.

triples_factory (CoreTriplesFactory) – The triples factory to train over.

optimizer (Optional[Optimizer]) – The optimizer to use while training the model.

negative_sampler (Union[str, NegativeSampler, Type[NegativeSampler], None]) – The class, instance, or name of the negative sampler.

negative_sampler_kwargs (Optional[Mapping[str, Any]]) – Keyword arguments to pass to the negative sampler class on instantiation.

automatic_memory_optimization (bool) – Whether to automatically optimize the sub-batch size during training and the batch size during evaluation with regard to the hardware at hand.
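The idea behind negative sampling under the stochastic local closed world assumption can be sketched without PyKEEN: each positive triple is paired with negatives produced by replacing its head or its tail with a different entity. The function name `corrupt_triples` and its parameters are hypothetical and chosen only for this illustration; the negative samplers shipped with the library may behave differently, for example with respect to filtering out known true triples.

```python
import random
from typing import List, Tuple

Triple = Tuple[int, int, int]  # (head, relation, tail) as integer IDs


def corrupt_triples(
    positives: List[Triple],
    num_entities: int,
    num_negs_per_pos: int = 1,
    seed: int = 0,
) -> List[Triple]:
    """Create negatives by replacing the head or the tail with another entity.

    Illustrative sketch only; the result is not filtered against known positives.
    """
    rng = random.Random(seed)
    negatives = []
    for head, relation, tail in positives:
        for _ in range(num_negs_per_pos):
            corrupt_head = rng.random() < 0.5
            original = head if corrupt_head else tail
            # Draw from the other num_entities - 1 IDs so the entity always changes.
            replacement = rng.randrange(num_entities - 1)
            if replacement >= original:
                replacement += 1
            if corrupt_head:
                negatives.append((replacement, relation, tail))
            else:
                negatives.append((head, relation, replacement))
    return negatives


positives = [(0, 0, 1), (1, 1, 2), (2, 0, 3)]
negatives = corrupt_triples(positives, num_entities=5, num_negs_per_pos=2)
```

Each negative keeps the relation of its positive and differs from it in exactly one position.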

- batch_size_search(*, triples_factory, training_instances, batch_size=None)

Find the maximum batch size for training with the current setting.

This method checks how big the batch size can be for the current model with the given training data and the hardware at hand. If possible, the method will output the determined batch size and a boolean value indicating that this batch size was successfully evaluated. Otherwise, the output will be batch size 1 and the boolean value will be False.

- Parameters

triples_factory (CoreTriplesFactory) – The triples factory over which the search is run.

training_instances (Instances) – The training instances generated from the triples factory.

batch_size (Optional[int]) – The batch size to start the search with. If None, set batch_size=num_triples (i.e. full batch training).

- Return type

Tuple[int, bool]

- Returns

Tuple containing the maximum possible batch size as well as an indicator if the evaluation with that size was successful.
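A search of this kind can be pictured as repeatedly trying a batch size and shrinking it when memory runs out. The sketch below assumes a halving strategy and a hypothetical `try_batch` callable that raises `MemoryError` when a batch does not fit; it illustrates the documented return value and is not a copy of the library's search routine.

```python
from typing import Callable, Tuple


def search_batch_size(try_batch: Callable[[int], None], start: int) -> Tuple[int, bool]:
    """Halve the batch size until one training step fits into memory.

    Returns the batch size and whether it was evaluated successfully.
    """
    batch_size = start
    while True:
        try:
            try_batch(batch_size)
        except MemoryError:
            if batch_size == 1:
                # Even a single instance does not fit: report failure.
                return 1, False
            batch_size //= 2
        else:
            return batch_size, True


def fake_step(limit: int) -> Callable[[int], None]:
    """Build a stand-in for a training step that fits at most `limit` instances."""

    def step(batch_size: int) -> None:
        if batch_size > limit:
            raise MemoryError(f"batch of {batch_size} does not fit")

    return step


found = search_batch_size(fake_step(limit=100), start=1024)
failed = search_batch_size(fake_step(limit=0), start=8)
```

With a limit of 100 and a start of 1024, the sizes tried are 1024, 512, 256, 128 and 64, so the search settles on 64. With a limit of 0 nothing fits and the result is batch size 1 with the flag set to False.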

- property checksum: str

The checksum of the model and optimizer the training loop was configured with.

- Return type

str

- property device

The device used by the model.

- property loss

The loss used by the model.

- sub_batch_and_slice(*, batch_size, sampler, triples_factory, training_instances)

Check if sub-batching and/or slicing is necessary to train the model on the hardware at hand.
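Sub-batching trades memory for time: a batch is processed in smaller pieces and the partial results are accumulated so that the outcome matches the full batch. The following sketch shows this for a mean loss over per-example losses; the helper names are hypothetical, and the real method additionally decides whether slicing is needed.

```python
from typing import List, Sequence


def split_into_sub_batches(batch: Sequence[float], sub_batch_size: int) -> List[Sequence[float]]:
    """Cut a batch into consecutive pieces of at most sub_batch_size items."""
    return [batch[i:i + sub_batch_size] for i in range(0, len(batch), sub_batch_size)]


def accumulated_mean_loss(batch: Sequence[float], sub_batch_size: int) -> float:
    """Accumulate sub-batch mean losses, weighted by their share of the batch."""
    total = 0.0
    for sub_batch in split_into_sub_batches(batch, sub_batch_size):
        sub_loss = sum(sub_batch) / len(sub_batch)
        total += sub_loss * len(sub_batch) / len(batch)
    return total


per_example_losses = [0.5, 1.5, 2.0, 4.0, 2.0]
full_batch_loss = sum(per_example_losses) / len(per_example_losses)
accumulated_loss = accumulated_mean_loss(per_example_losses, sub_batch_size=2)
```

Weighting each sub-batch by its size keeps the accumulated value equal to the full-batch mean even when the last sub-batch is smaller.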

- train(triples_factory, num_epochs=1, batch_size=None, slice_size=None, label_smoothing=0.0, sampler=None, continue_training=False, only_size_probing=False, use_tqdm=True, use_tqdm_batch=True, tqdm_kwargs=None, stopper=None, result_tracker=None, sub_batch_size=None, num_workers=None, clear_optimizer=False, checkpoint_directory=None, checkpoint_name=None, checkpoint_frequency=None, checkpoint_on_failure=False, drop_last=None, callbacks=None)

Train the KGE model.

- Parameters

triples_factory (CoreTriplesFactory) – The training triples.

num_epochs (int) – The number of epochs to train the model.

batch_size (Optional[int]) – If set, the batch size to use for mini-batch training. Otherwise, the largest possible batch size is determined automatically.

slice_size (Optional[int]) – (> 0) The divisor for the scoring function when using slicing. Slicing is generally only possible for LCWA training loops, and only for models that implement the slicing capability.

label_smoothing (float) – (0 <= label_smoothing < 1) If larger than zero, use label smoothing.

sampler (Optional[str]) – (None or ‘schlichtkrull’) The type of sampler to use. At the moment, sLCWA in R-GCN is the only user of schlichtkrull sampling.

continue_training (bool) – If set to False, (re-)initialize the model’s weights. Otherwise, continue training.

only_size_probing (bool) – The evaluation is only performed for two batches to test the memory footprint, especially on GPUs.

use_tqdm (bool) – Should a progress bar be shown for epochs?

use_tqdm_batch (bool) – Should a progress bar be shown for batching (inside the epoch progress bar)?

tqdm_kwargs (Optional[Mapping[str, Any]]) – Keyword arguments passed to tqdm for managing the progress bar.

stopper (Optional[Stopper]) – An instance of pykeen.stopper.EarlyStopper with settings for checking if training should stop early.

result_tracker (Optional[ResultTracker]) – The result tracker.

sub_batch_size (Optional[int]) – If provided, split each batch into sub-batches to avoid memory issues for large models / small GPUs.

num_workers (Optional[int]) – The number of child CPU workers used for loading data. If None, data are loaded in the main process.

clear_optimizer (bool) – Whether to delete the optimizer instance after training (as the optimizer might have additional memory consumption due to e.g. moments in Adam).

checkpoint_directory (Union[None, str, Path]) – An optional directory to store the checkpoint files. If None, a subdirectory named checkpoints in the directory defined by pykeen.constants.PYKEEN_HOME is used. Unless the environment variable PYKEEN_HOME is overridden, this will be ~/.pykeen/checkpoints.

checkpoint_name (Optional[str]) – The filename for saving checkpoints. If the given filename already exists, that file will be loaded and used to continue training.

checkpoint_frequency (Optional[int]) – The frequency of saving checkpoints in minutes. Setting it to 0 will save a checkpoint after every epoch.

checkpoint_on_failure (bool) – Whether to save a checkpoint in cases of a RuntimeError or MemoryError. This option differs from ordinary checkpoints, since ordinary checkpoints are only saved after a successful epoch. When saving checkpoints due to failure of the training loop, there is no guarantee that all random states can be recovered correctly, which might cause problems with regard to the reproducibility of that specific training loop. Therefore, these checkpoints are saved with a distinct checkpoint name, which will be PyKEEN_just_saved_my_day_{datetime}.pt in the given checkpoint_root.

drop_last (Optional[bool]) – Whether to drop the last batch in each epoch to prevent smaller batches. Defaults to False, except if the model contains batch normalization layers. Can be provided explicitly to override.

callbacks (Union[None, TrainingCallback, Collection[TrainingCallback]]) – An optional pykeen.training.TrainingCallback or collection of callback instances that define one of several functionalities. Their interface was inspired by Keras.

- Return type

- Returns

The losses per epoch.
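The effect of drop_last on the batches seen in one epoch comes down to simple arithmetic. The sketch below uses a hypothetical helper, `batch_sizes`, to list the batch sizes produced for a given number of training instances; it only illustrates the parameter's meaning and does not reproduce how the library builds its data loader.

```python
from typing import List


def batch_sizes(num_instances: int, batch_size: int, drop_last: bool) -> List[int]:
    """List the sizes of the batches that make up one epoch."""
    full, remainder = divmod(num_instances, batch_size)
    sizes = [batch_size] * full
    if remainder and not drop_last:
        # Keep the trailing, smaller batch.
        sizes.append(remainder)
    return sizes


kept = batch_sizes(10, 4, drop_last=False)
dropped = batch_sizes(10, 4, drop_last=True)
```

With 10 instances and a batch size of 4, keeping the last batch yields sizes 4, 4 and 2, while dropping it yields 4 and 4. A very small trailing batch is the case that tends to cause trouble for batch normalization layers.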

Local Closed World Assumption

- class LCWATrainingLoop(model, triples_factory, optimizer=None, automatic_memory_optimization=True)

A training loop that uses the local closed world assumption training approach.

Initialize the training loop.

- Parameters

model (Model) – The model to train.

triples_factory (CoreTriplesFactory) – The training triples factory.

optimizer (Optional[Optimizer]) – The optimizer to use while training the model.

automatic_memory_optimization (bool) – Whether to automatically optimize the sub-batch size during training and the batch size during evaluation with regard to the hardware at hand.
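Under the local closed world assumption, every (head, relation) pair seen in training is scored against all entities, and every tail that was not observed for the pair is treated as a negative. The sketch below builds such dense targets with a hypothetical helper, `build_lcwa_targets`; it is meant to convey the idea and does not mirror the data structures used by the library.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Triple = Tuple[int, int, int]  # (head, relation, tail) as integer IDs


def build_lcwa_targets(triples: List[Triple], num_entities: int) -> Dict[Tuple[int, int], List[float]]:
    """Map each (head, relation) pair to a multi-hot vector over all tails."""
    tails_by_pair = defaultdict(set)
    for head, relation, tail in triples:
        tails_by_pair[(head, relation)].add(tail)
    return {
        pair: [1.0 if entity in tails else 0.0 for entity in range(num_entities)]
        for pair, tails in tails_by_pair.items()
    }


triples = [(0, 0, 1), (0, 0, 2), (1, 0, 2)]
targets = build_lcwa_targets(triples, num_entities=4)
```

Here the pair (0, 0) has two observed tails, so its target vector marks entities 1 and 2 as positive and entities 0 and 3 as negative.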

- batch_size_search(*, triples_factory, training_instances, batch_size=None)

Find the maximum batch size for training with the current setting.

This method checks how big the batch size can be for the current model with the given training data and the hardware at hand. If possible, the method will output the determined batch size and a boolean value indicating that this batch size was successfully evaluated. Otherwise, the output will be batch size 1 and the boolean value will be False.

- Parameters

triples_factory (CoreTriplesFactory) – The triples factory over which the search is run.

training_instances (Instances) – The training instances generated from the triples factory.

batch_size (Optional[int]) – The batch size to start the search with. If None, set batch_size=num_triples (i.e. full batch training).

- Return type

Tuple[int, bool]

- Returns

Tuple containing the maximum possible batch size as well as an indicator if the evaluation with that size was successful.

- property checksum: str

The checksum of the model and optimizer the training loop was configured with.

- Return type

str

- property device

The device used by the model.

- property loss

The loss used by the model.

- sub_batch_and_slice(*, batch_size, sampler, triples_factory, training_instances)

Check if sub-batching and/or slicing is necessary to train the model on the hardware at hand.

- train(triples_factory, num_epochs=1, batch_size=None, slice_size=None, label_smoothing=0.0, sampler=None, continue_training=False, only_size_probing=False, use_tqdm=True, use_tqdm_batch=True, tqdm_kwargs=None, stopper=None, result_tracker=None, sub_batch_size=None, num_workers=None, clear_optimizer=False, checkpoint_directory=None, checkpoint_name=None, checkpoint_frequency=None, checkpoint_on_failure=False, drop_last=None, callbacks=None)

Train the KGE model.

- Parameters

triples_factory (CoreTriplesFactory) – The training triples.

num_epochs (int) – The number of epochs to train the model.

batch_size (Optional[int]) – If set, the batch size to use for mini-batch training. Otherwise, the largest possible batch size is determined automatically.

slice_size (Optional[int]) – (> 0) The divisor for the scoring function when using slicing. Slicing is generally only possible for LCWA training loops, and only for models that implement the slicing capability.

label_smoothing (float) – (0 <= label_smoothing < 1) If larger than zero, use label smoothing.

sampler (Optional[str]) – (None or ‘schlichtkrull’) The type of sampler to use. At the moment, sLCWA in R-GCN is the only user of schlichtkrull sampling.

continue_training (bool) – If set to False, (re-)initialize the model’s weights. Otherwise, continue training.

only_size_probing (bool) – The evaluation is only performed for two batches to test the memory footprint, especially on GPUs.

use_tqdm (bool) – Should a progress bar be shown for epochs?

use_tqdm_batch (bool) – Should a progress bar be shown for batching (inside the epoch progress bar)?

tqdm_kwargs (Optional[Mapping[str, Any]]) – Keyword arguments passed to tqdm for managing the progress bar.

stopper (Optional[Stopper]) – An instance of pykeen.stopper.EarlyStopper with settings for checking if training should stop early.

result_tracker (Optional[ResultTracker]) – The result tracker.

sub_batch_size (Optional[int]) – If provided, split each batch into sub-batches to avoid memory issues for large models / small GPUs.

num_workers (Optional[int]) – The number of child CPU workers used for loading data. If None, data are loaded in the main process.

clear_optimizer (bool) – Whether to delete the optimizer instance after training (as the optimizer might have additional memory consumption due to e.g. moments in Adam).

checkpoint_directory (Union[None, str, Path]) – An optional directory to store the checkpoint files. If None, a subdirectory named checkpoints in the directory defined by pykeen.constants.PYKEEN_HOME is used. Unless the environment variable PYKEEN_HOME is overridden, this will be ~/.pykeen/checkpoints.

checkpoint_name (Optional[str]) – The filename for saving checkpoints. If the given filename already exists, that file will be loaded and used to continue training.

checkpoint_frequency (Optional[int]) – The frequency of saving checkpoints in minutes. Setting it to 0 will save a checkpoint after every epoch.

checkpoint_on_failure (bool) – Whether to save a checkpoint in cases of a RuntimeError or MemoryError. This option differs from ordinary checkpoints, since ordinary checkpoints are only saved after a successful epoch. When saving checkpoints due to failure of the training loop, there is no guarantee that all random states can be recovered correctly, which might cause problems with regard to the reproducibility of that specific training loop. Therefore, these checkpoints are saved with a distinct checkpoint name, which will be PyKEEN_just_saved_my_day_{datetime}.pt in the given checkpoint_root.

drop_last (Optional[bool]) – Whether to drop the last batch in each epoch to prevent smaller batches. Defaults to False, except if the model contains batch normalization layers. Can be provided explicitly to override.

callbacks (Union[None, TrainingCallback, Collection[TrainingCallback]]) – An optional pykeen.training.TrainingCallback or collection of callback instances that define one of several functionalities. Their interface was inspired by Keras.

- Return type

- Returns

The losses per epoch.
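Label smoothing softens the hard 0/1 targets used with dense labels. The sketch below shows one common formulation, in which a true label becomes 1 - epsilon and the remaining mass epsilon is spread evenly over the other classes. Both the helper name `smooth_labels` and this particular formula are assumptions made for illustration; the formula applied by the library may differ in detail.

```python
from typing import List


def smooth_labels(labels: List[float], epsilon: float) -> List[float]:
    """Apply label smoothing to a 0/1 label vector (one common formulation)."""
    if not 0.0 <= epsilon < 1.0:
        raise ValueError("epsilon must satisfy 0 <= epsilon < 1")
    if len(labels) < 2:
        raise ValueError("need at least two classes")
    true_value = 1.0 - epsilon
    false_value = epsilon / (len(labels) - 1)
    return [true_value if label == 1.0 else false_value for label in labels]


smoothed = smooth_labels([0.0, 1.0, 0.0, 0.0, 0.0], epsilon=0.2)
unchanged = smooth_labels([0.0, 1.0, 0.0], epsilon=0.0)
```

With five classes and epsilon = 0.2, the true label is lowered to 0.8 and each false label is raised to 0.05. With epsilon = 0 the labels are left as they are, which matches the documented default of 0.0.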

Base Classes

- class TrainingLoop(model, triples_factory, optimizer=None, automatic_memory_optimization=True)

A training loop.

Initialize the training loop.

- Parameters

model (Model) – The model to train.

triples_factory (CoreTriplesFactory) – The training triples factory.

optimizer (Optional[Optimizer]) – The optimizer to use while training the model.

automatic_memory_optimization (bool) – Whether to automatically optimize the sub-batch size during training and the batch size during evaluation with regard to the hardware at hand.

- batch_size_search(*, triples_factory, training_instances, batch_size=None)

Find the maximum batch size for training with the current setting.

This method checks how big the batch size can be for the current model with the given training data and the hardware at hand. If possible, the method will output the determined batch size and a boolean value indicating that this batch size was successfully evaluated. Otherwise, the output will be batch size 1 and the boolean value will be False.

- Parameters

triples_factory (CoreTriplesFactory) – The triples factory over which the search is run.

training_instances (Instances) – The training instances generated from the triples factory.

batch_size (Optional[int]) – The batch size to start the search with. If None, set batch_size=num_triples (i.e. full batch training).

- Return type

Tuple[int, bool]

- Returns

Tuple containing the maximum possible batch size as well as an indicator if the evaluation with that size was successful.

- property checksum: str

The checksum of the model and optimizer the training loop was configured with.

- Return type

str

- property device

The device used by the model.

- classmethod get_normalized_name()

Get the normalized name of the training loop.

- Return type

str

- property loss

The loss used by the model.

- sub_batch_and_slice(*, batch_size, sampler, triples_factory, training_instances)

Check if sub-batching and/or slicing is necessary to train the model on the hardware at hand.

- train(triples_factory, num_epochs=1, batch_size=None, slice_size=None, label_smoothing=0.0, sampler=None, continue_training=False, only_size_probing=False, use_tqdm=True, use_tqdm_batch=True, tqdm_kwargs=None, stopper=None, result_tracker=None, sub_batch_size=None, num_workers=None, clear_optimizer=False, checkpoint_directory=None, checkpoint_name=None, checkpoint_frequency=None, checkpoint_on_failure=False, drop_last=None, callbacks=None)

Train the KGE model.

- Parameters

triples_factory (CoreTriplesFactory) – The training triples.

num_epochs (int) – The number of epochs to train the model.

batch_size (Optional[int]) – If set, the batch size to use for mini-batch training. Otherwise, the largest possible batch size is determined automatically.

slice_size (Optional[int]) – (> 0) The divisor for the scoring function when using slicing. Slicing is generally only possible for LCWA training loops, and only for models that implement the slicing capability.

label_smoothing (float) – (0 <= label_smoothing < 1) If larger than zero, use label smoothing.

sampler (Optional[str]) – (None or ‘schlichtkrull’) The type of sampler to use. At the moment, sLCWA in R-GCN is the only user of schlichtkrull sampling.

continue_training (bool) – If set to False, (re-)initialize the model’s weights. Otherwise, continue training.

only_size_probing (bool) – The evaluation is only performed for two batches to test the memory footprint, especially on GPUs.

use_tqdm (bool) – Should a progress bar be shown for epochs?

use_tqdm_batch (bool) – Should a progress bar be shown for batching (inside the epoch progress bar)?

tqdm_kwargs (Optional[Mapping[str, Any]]) – Keyword arguments passed to tqdm for managing the progress bar.

stopper (Optional[Stopper]) – An instance of pykeen.stopper.EarlyStopper with settings for checking if training should stop early.

result_tracker (Optional[ResultTracker]) – The result tracker.

sub_batch_size (Optional[int]) – If provided, split each batch into sub-batches to avoid memory issues for large models / small GPUs.

num_workers (Optional[int]) – The number of child CPU workers used for loading data. If None, data are loaded in the main process.

clear_optimizer (bool) – Whether to delete the optimizer instance after training (as the optimizer might have additional memory consumption due to e.g. moments in Adam).

checkpoint_directory (Union[None, str, Path]) – An optional directory to store the checkpoint files. If None, a subdirectory named checkpoints in the directory defined by pykeen.constants.PYKEEN_HOME is used. Unless the environment variable PYKEEN_HOME is overridden, this will be ~/.pykeen/checkpoints.

checkpoint_name (Optional[str]) – The filename for saving checkpoints. If the given filename already exists, that file will be loaded and used to continue training.

checkpoint_frequency (Optional[int]) – The frequency of saving checkpoints in minutes. Setting it to 0 will save a checkpoint after every epoch.

checkpoint_on_failure (bool) – Whether to save a checkpoint in cases of a RuntimeError or MemoryError. This option differs from ordinary checkpoints, since ordinary checkpoints are only saved after a successful epoch. When saving checkpoints due to failure of the training loop, there is no guarantee that all random states can be recovered correctly, which might cause problems with regard to the reproducibility of that specific training loop. Therefore, these checkpoints are saved with a distinct checkpoint name, which will be PyKEEN_just_saved_my_day_{datetime}.pt in the given checkpoint_root.

drop_last (Optional[bool]) – Whether to drop the last batch in each epoch to prevent smaller batches. Defaults to False, except if the model contains batch normalization layers. Can be provided explicitly to override.

callbacks (Union[None, TrainingCallback, Collection[TrainingCallback]]) – An optional pykeen.training.TrainingCallback or collection of callback instances that define one of several functionalities. Their interface was inspired by Keras.

- Return type

- Returns

The losses per epoch.
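The interplay between checkpoint_frequency (given in minutes) and the rule that ordinary checkpoints are only written after a completed epoch can be simulated in a few lines. The helper `checkpoint_epochs` and its elapsed-time bookkeeping are hypothetical; the sketch assumes a checkpoint is written at the end of the first epoch at which the time since the last checkpoint reaches the configured frequency.

```python
from typing import List


def checkpoint_epochs(epoch_durations_min: List[float], checkpoint_frequency: int) -> List[int]:
    """Return the (1-based) epochs after which a checkpoint would be saved."""
    saved = []
    elapsed_since_save = 0.0
    for epoch, duration in enumerate(epoch_durations_min, start=1):
        elapsed_since_save += duration
        # Checkpoints are only considered once an epoch has finished.
        if elapsed_since_save >= checkpoint_frequency:
            saved.append(epoch)
            elapsed_since_save = 0.0
    return saved


every_ten_minutes = checkpoint_epochs([4, 4, 4, 4, 4], checkpoint_frequency=10)
every_epoch = checkpoint_epochs([4, 4, 4, 4, 4], checkpoint_frequency=0)
```

With five epochs of four minutes each and a frequency of 10, only the third epoch triggers a checkpoint. A frequency of 0 triggers one after every epoch, as described for the parameter.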

Callbacks

Training callbacks.

Training callbacks allow for arbitrary extension of the functionality of the pykeen.training.TrainingLoop without subclassing it. Each callback instance has a loop attribute that allows access to the parent training loop and all of its attributes, including the model. The interaction points are similar to those of Keras.

Examples

It was suggested in Issue #333 that it might be useful to log all batch losses. This could be accomplished with the following:

from pykeen.training import TrainingCallback


class BatchLossReportCallback(TrainingCallback):
    def on_batch(self, epoch: int, batch, batch_loss: float):
        print(epoch, batch_loss)
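How a loop hands control to callbacks at fixed interaction points can be shown with a toy example that runs without PyKEEN. All names below (ToyCallback, CollectingCallback, ToyMultiCallback, toy_training_loop) are hypothetical stand-ins; they mimic the pattern described above and are not the library's classes.

```python
from typing import Iterable, List, Tuple


class ToyCallback:
    """Minimal callback interface with two interaction points."""

    def on_batch(self, epoch: int, batch: int, batch_loss: float) -> None:
        pass

    def post_epoch(self, epoch: int, epoch_loss: float) -> None:
        pass


class CollectingCallback(ToyCallback):
    """Record every batch loss instead of printing it."""

    def __init__(self) -> None:
        self.batch_losses: List[Tuple[int, float]] = []

    def on_batch(self, epoch: int, batch: int, batch_loss: float) -> None:
        self.batch_losses.append((epoch, batch_loss))


class ToyMultiCallback(ToyCallback):
    """Forward each interaction point to several callbacks."""

    def __init__(self, callbacks: Iterable[ToyCallback]) -> None:
        self.callbacks = list(callbacks)

    def on_batch(self, epoch: int, batch: int, batch_loss: float) -> None:
        for callback in self.callbacks:
            callback.on_batch(epoch, batch, batch_loss)

    def post_epoch(self, epoch: int, epoch_loss: float) -> None:
        for callback in self.callbacks:
            callback.post_epoch(epoch, epoch_loss)


def toy_training_loop(losses_per_epoch: List[List[float]], callback: ToyCallback) -> List[float]:
    """Pretend to train: report precomputed batch losses to the callback."""
    epoch_losses = []
    for epoch, losses in enumerate(losses_per_epoch, start=1):
        for batch_index, loss in enumerate(losses):
            callback.on_batch(epoch, batch_index, loss)
        epoch_loss = sum(losses) / len(losses)
        callback.post_epoch(epoch, epoch_loss)
        epoch_losses.append(epoch_loss)
    return epoch_losses


first, second = CollectingCallback(), CollectingCallback()
epoch_losses = toy_training_loop([[0.9, 0.7], [0.5]], ToyMultiCallback([first, second]))
```

Both collectors receive the same sequence of batch losses, which is the behavior one would expect from a wrapper that calls multiple callbacks together.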

Classes

- An interface for training callbacks.

- An adapter for the

- A wrapper for calling multiple training callbacks together.

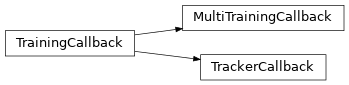

Class Inheritance Diagram